Part 6: Managing Secrets with Vault, Kubernetes Starter

Written August 23rd, 2024 by Nathan Frank

Photo source by BoliviaInteligente on Unsplash

Recap

This article picks up from the fifth article: Kubernetes and Orchestration in the Kubernetes Starter series.

Know about Managing Secrets with Vault? Skip to Helm and Environments.

Vault

Secrets are vital to the security of applications: they control the authentication that allows one system to talk with another. Managing secrets creates real organizational issues:

Who has access to each of them?

Who can change them?

Who can read them?

How often do they change?

How does one person know that another has changed the value?

If there's been a breach, how do we manage re-securing the systems?

Vault provides a centralized way to manage secrets and to handle user accounts/roles, so that different people get access to just the secrets they need. There are cloud services that perform similar roles, such as AWS KMS, Google Cloud KMS, and Azure Key Vault.

HashiCorp Vault has a freely available community offering (which we'll be using) as well as enterprise service upgrades. The last year has seen some changes in the licensing of HashiCorp's open source products, so it may make sense to consider migrating to OpenBao. This series will continue with Vault.

Centralizing secrets solves the problem of keeping them in one place and managing them from there, but Vault goes further with automatic updates of the secrets in a Kubernetes cluster along with an audit trail.

These tools, especially when enhanced with dynamic secrets (short lived, programmatically rotating secrets), help to reduce the impact if a secret is compromised.

Infrastructure as Code, but be mindful with secrets

Throughout this process we've been careful not to check any real secrets into the codebase, as that creates security risks. Instead, configure.sh scripts prompt for the secret values, which simplifies the manual process of updating them.
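As a rough illustration of that prompting approach (a sketch only, not the actual contents of configure.sh), a POSIX shell helper can read a value without echoing it, so the value never lands in the repository or on screen. The function name prompt_secret and the variable DB_PASSWORD are hypothetical.

```shell
# Hypothetical helper: prompt for a secret without echoing it to the terminal.
prompt_secret() {
  printf '%s: ' "$1" >&2              # prompt goes to stderr so stdout carries only the value
  if [ -t 0 ]; then stty -echo; fi    # hide typing when stdin is a terminal
  IFS= read -r secret_value
  if [ -t 0 ]; then stty echo; printf '\n' >&2; fi
  printf '%s' "$secret_value"
}

# Usage (commented out so the file can be sourced safely):
# DB_PASSWORD=$(prompt_secret "Database password")
```

Because the value is captured in a variable rather than typed as a command argument, it also stays out of the shell history.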

While some of these aspects could be fully automated and checked in with IaC, we look to reduce secrets within a codebase for security.

Vault clients

Vault can be managed in three ways: with the CLI, through the HTTP API, or through the web UI.

One can log in to a specific Vault instance and run vault CLI commands there. This is fine for local testing, but scripts then have to be run on the Vault instances themselves.

By leveraging the API, scripts can still create the resources we need, but they do not need to run on the Vault instance itself, which makes them easier to run from a CI/CD process.

We'll use the API to create most of the objects we need, and then use the Vault UI to update the secret values that get automatically applied to Kubernetes. IaC practice discourages configuring the majority of the objects through the Vault UI, because manual steps are not repeatable.
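A minimal sketch of that API-driven approach with curl, assuming a KV version 2 engine mounted at kvv2 (the mount used later in this article) and that VAULT_ADDR and VAULT_TOKEN are exported by the caller. The helper names kv2_data_url and vault_write_secret are hypothetical; the endpoint shape (/v1/<mount>/data/<path> with a JSON body wrapped in a data key) follows Vault's KV v2 HTTP API.

```shell
# Build the KV v2 URL for a secret (hypothetical helper).
kv2_data_url() {
  # $1 = secrets engine mount, $2 = secret path under that mount
  printf '%s/v1/%s/data/%s\n' "${VAULT_ADDR:-http://127.0.0.1:8200}" "$1" "$2"
}

# Create or update a secret; $3 is a JSON document like {"data": {"KEY": "value"}}.
vault_write_secret() {
  curl --silent --show-error --fail \
    --header "X-Vault-Token: ${VAULT_TOKEN}" \
    --header "Content-Type: application/json" \
    --request POST \
    --data "$3" \
    "$(kv2_data_url "$1" "$2")"
}

# Usage (placeholder value only, never commit a real one):
# vault_write_secret kvv2 sample/sample-node-api/sample-node-api-local \
#   '{"data": {"PRIVATE_NODE_API_DB_USERNAME": "TBD"}}'
```

Since nothing here depends on being inside the Vault pod, the same functions can run from a laptop or a CI/CD job that can reach the Vault address.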

Note that one can also create Vault resources using the Vault Terraform Provider. For this series we are not adding in another technology layer with Terraform at this time.

Vault Injector

Vault injector is one way to automate the update of secrets flowing out to pods that need to leverage them. It leverages a sidecar container in each pod to watch for changes and then provides the secrets as env variables through a file mount which is picked up by the workload container on the pod.

In the diagram, the Vault Secret Injector pod manages when new Vault Injectors should be applied. The Vault Injector containers are sidecars that run in the same pods as the app workload and take the secrets in Vault and expose them as a file mounted into the app pod.

From a scalability perspective this poses concerns: even a small resource footprint per sidecar adds up when running hundreds of pods, which lowers the amount of resources available for real work and creates more points of failure. For n application pods this means roughly 2n containers (an app container plus a sidecar in each pod) across n+1 pods once the injector pod is counted (more if the injector is scaled for HA).

This diagram shows how as we scale to three applications the sidecar containers scale as well.

Vault Secret Operator

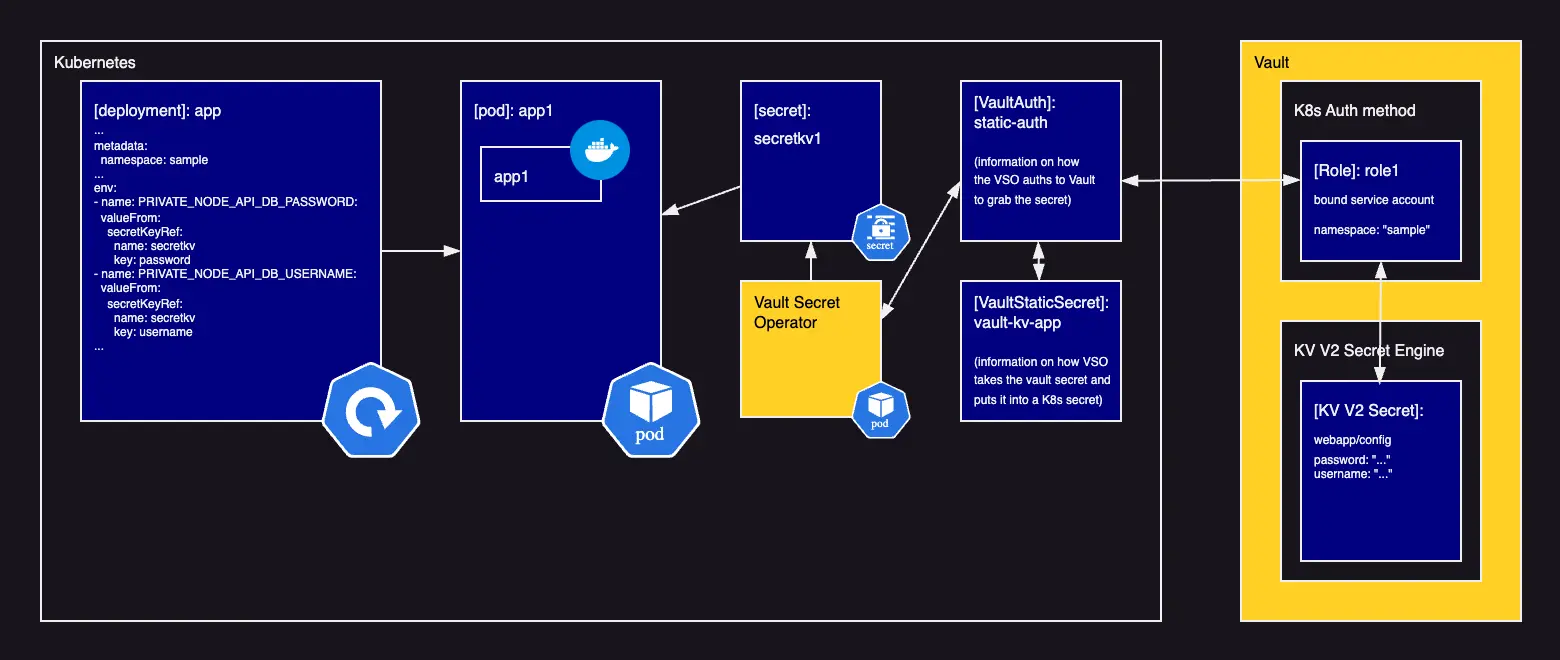

The newer way of keeping secrets in Vault synced with workloads is the Vault Secret Operator (VSO, officially named the Vault Secrets Operator). This workload watches for changes to secrets in Vault and automates the update of those values in native Kubernetes secret objects, which can be picked up by workloads in pods.

This diagram shows how the Vault Secret Operator creates direct secrets that are picked up by the pods. The pods have less complexity as there's only the app containers needed without an additional sidecar for secret updating.

On larger clusters, having multiple replicas for High Availability may mean 3+ operator instances running, but that's far fewer resources than one sidecar for each pod. For n application pods this means roughly n containers across n+1 pods once the operator pod is counted (more if the operator is scaled for HA). On small clusters this might use more resources than the injector, but it quickly shows benefits at scale.

This diagram shows that as we scale to three applications, the number of containers grows by only one per application rather than two.

While Vault Secret Operator seems more complex with additional boxes, that's really just extra configuration, and it uses fewer resources overall with fewer actively running workloads.
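The container arithmetic behind that comparison can be worked through with a small shell sketch. It follows the article's approximation and additionally counts the single injector or operator container; the function names are hypothetical, and HA replicas and init containers are ignored.

```shell
# Containers when every app pod carries a sidecar, plus one injector pod.
injector_containers() { echo $(( 2 * $1 + 1 )); }

# Containers when a single operator pod serves all app pods.
operator_containers() { echo $(( $1 + 1 )); }

for n in 1 3 100; do
  printf 'apps=%s injector=%s operator=%s\n' \
    "$n" "$(injector_containers "$n")" "$(operator_containers "$n")"
done
```

Under these assumptions, 100 application pods run 201 containers with the sidecar model against 101 with the operator model.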

For this series we'll be leveraging the newer Vault Secret Operator.

Pod restarts after secrets change

While working with Vault you'll notice that changing a secret in Vault leads to a change in the Kubernetes secret, but when visiting /config you'll notice that the API doesn't pick up the latest value. Locally, a workflow that changes the secrets and automatically restarts the pods would be more convenient, but in production it leads to issues: someone might publish a new secret that triggers a redeploy and breaks applications. It's better to require manually deleting a pod so that it gets recreated with the new secret.

This post discusses Kustomize SecretGenerator, which gives the secrets new names every time they are updated, forcing a redeployment. It also requires the previous injector method of loading secrets as a mounted file within the app container. There are also other options like Reloader.

Controlling when pods are relaunched to pick up secret changes is key, especially in higher environments, and requires operational/process consideration.
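When it is time to pick up a changed secret, the relaunch can be an explicit, operator-triggered step. A minimal sketch, assuming the app runs as a Deployment named sample-node-api in the sample namespace (both names are assumptions for illustration):

```shell
# Restart every pod of a Deployment in a controlled, rolling fashion.
restart_app() {
  # $1 = deployment name, $2 = namespace
  kubectl rollout restart "deployment/$1" -n "$2"
}

# Or delete one pod and let its controller recreate it with the new secret.
recycle_pod() {
  # $1 = pod name, $2 = namespace
  kubectl delete pod "$1" -n "$2"
}

# Usage:
# restart_app sample-node-api sample
```

Keeping this as a deliberate command (or a gated pipeline step) leaves the decision of when production pods restart with a person or process rather than with the secret update itself.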

Vault hands on

Let's dig into the actual project.

Installing Vault

From the folder shared/vault/_devops run the command deploy.sh to deploy Vault with Helm to the sample-vault namespace.
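For orientation, a Helm-based install along these lines is roughly what such a script would wrap. This is a sketch, not the contents of deploy.sh: the release name sample-vault is inferred from the pod name sample-vault-0 used in the next steps, the function name is hypothetical, and the chart comes from HashiCorp's public Helm repository.

```shell
install_vault() {
  helm repo add hashicorp https://helm.releases.hashicorp.com
  helm repo update
  # "upgrade --install" makes the command safe to re-run
  helm upgrade --install sample-vault hashicorp/vault \
    --namespace sample-vault --create-namespace
}

# Usage:
# install_vault && kubectl get pods -n sample-vault
```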

Unsealing Vault

When Vault first starts up it must be initialized, which produces unseal keys and a root token. Three of these unseal keys are needed to unseal a Vault instance. The root token is required to authenticate with the clients (web, CLI, or API).

In Kubernetes, Vault pods will report as not ready until they are initialized and unsealed; in this case it's a manual process.

Connect to the vault container

    kubectl exec -it -n sample-vault sample-vault-0 -- /bin/sh

Initialize the vault

While connected to the vault instance:

    vault operator init

This will provide a listing of 5 unseal keys and a root token similar to:

    Unseal Key 1: /iUiAI/W22QVsCTyyMHAzTdS1t3+lYeiEpbiyB20kh5e
    Unseal Key 2: WXducak7AxmTvu1o/fY1yseRx5YtBxobwa0gu5wtskqT
    Unseal Key 3: y7GTlvPQokP73BC4CJZqzd8YInKOtOEs+DtcNjkG/UAF
    Unseal Key 4: 6QRErTGTswuo3xRRMThXGjoeGThwUkF2k2RI2Qqatm7Z
    Unseal Key 5: /3NKjziRYurzQuXoin4FuftsNH9QrpfrB97vYkkjIoNp

    Initial Root Token: hvs.6mDRwBFsvkPfkH0uLAKISbkF

    Vault initialized with 5 key shares and a key threshold of 3. Please securely
    distribute the key shares printed above. When the Vault is re-sealed,
    restarted, or stopped, you must supply at least 3 of these keys to unseal it
    before it can start servicing requests.

    Vault does not store the generated root key. Without at least 3 keys to
    reconstruct the root key, Vault will remain permanently sealed!

    It is possible to generate new unseal keys, provided you have a quorum of
    existing unseal keys share. See the vault operator rekey for more information.

Write these down/store them somewhere safe.

Run the unseal command x3

While connected to the vault instance:

    vault operator unseal

While one can run vault operator unseal [key], this is less preferred because it puts the key in one's shell history, which should be avoided.

Run the command three times, and each time it asks for an unseal key provide a different one of the five. After each attempt the output reports the unseal progress (1/3, then 2/3) until the third key unseals Vault.

See output like:

    / $ vault operator unseal
    Unseal Key (will be hidden):
    Key                Value
    ---                -----
    Seal Type          shamir
    Initialized        true
    Sealed             true
    Total Shares       5
    Threshold          3
    Unseal Progress    1/3
    Unseal Nonce       e7490491-f64d-413d-98f1-a5739054faa5
    Version            1.17.2
    Build Date         2024-07-05T15:19:12Z
    Storage Type       file
    HA Enabled         false

    / $ vault operator unseal
    Unseal Key (will be hidden):
    Key                Value
    ---                -----
    Seal Type          shamir
    Initialized        true
    Sealed             true
    Total Shares       5
    Threshold          3
    Unseal Progress    2/3
    Unseal Nonce       e7490491-f64d-413d-98f1-a5739054faa5
    Version            1.17.2
    Build Date         2024-07-05T15:19:12Z
    Storage Type       file
    HA Enabled         false

    / $ vault operator unseal
    Unseal Key (will be hidden):
    Key             Value
    ---             -----
    Seal Type       shamir
    Initialized     true
    Sealed          false
    Total Shares    5
    Threshold       3
    Version         1.17.2
    Build Date      2024-07-05T15:19:12Z
    Storage Type    file
    Cluster Name    vault-cluster-b2f63ed2
    Cluster ID      98bb77f3-98b0-66e0-da09-8d5d7961d092
    HA Enabled      false

Disconnect from container shell

Then use exit to disconnect from the sh session.

These steps need to be done on each of the Vault instances if running high availability, but in our case we are using a single instance.
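To confirm from outside the pod whether an instance still needs unsealing, the exit code of vault status can be used: the Vault CLI documents 0 for unsealed, 2 for sealed, and 1 for an error. A small sketch, with the function name vault_seal_state being hypothetical:

```shell
# Report the seal state of a Vault pod from the exit code of `vault status`.
vault_seal_state() {
  # $1 = pod name, $2 = namespace
  rc=0
  kubectl exec -n "$2" "$1" -- vault status >/dev/null 2>&1 || rc=$?
  case $rc in
    0) echo "unsealed" ;;
    2) echo "sealed" ;;
    *) echo "error" ;;
  esac
}

# Usage:
# vault_seal_state sample-vault-0 sample-vault
```

In a high availability setup the same check could be looped over each Vault pod to see which ones still need their keys.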

Auto Unsealing Vault

When Vault gets rebooted, it starts up sealed and requires the manual process of unsealing. One can use an automated process to unseal, but it requires an external key management mechanism (for example a cloud KMS) that each Vault instance can use to unseal itself. This is desired for High Availability instances, so that when they come online they can auto unseal and begin serving secrets.

As that approach requires additional external resources/cost it's not covered here. This cost should be negligible for enterprises and worth the configuration.

Creating secret/user/policy structure

We'll create a key/value pair set of env secrets that map to the below structure along with users that have policies for read/write or just read.

    kvv2/
      sample/
        (sample-admin has read/write access to below tree)

        sample-node-api/
          (sample-node-api-admin has read/write access to below tree)
          (sample-node-api-user has read only access to below)

          sample-node-api-local/
            PRIVATE_NODE_API_DB_USERNAME=""
            PRIVATE_NODE_API_DB_PASSWORD=""
          sample-node-api-dev/
            PRIVATE_NODE_API_DB_USERNAME=""
            PRIVATE_NODE_API_DB_PASSWORD=""
          sample-node-api-qa/
            PRIVATE_NODE_API_DB_USERNAME=""
            PRIVATE_NODE_API_DB_PASSWORD=""
          sample-node-api-stg/
            PRIVATE_NODE_API_DB_USERNAME=""
            PRIVATE_NODE_API_DB_PASSWORD=""
          sample-node-api-prod/
            PRIVATE_NODE_API_DB_USERNAME=""
            PRIVATE_NODE_API_DB_PASSWORD=""

        sample-python-api/
          (sample-python-api-admin has read/write access to below tree)
          (sample-python-api-user has read only access to below)

          sample-python-api-local/
            PRIVATE_PYTHON_API_DB_USERNAME=""
            PRIVATE_PYTHON_API_DB_PASSWORD=""
          sample-python-api-dev/
            PRIVATE_PYTHON_API_DB_USERNAME=""
            PRIVATE_PYTHON_API_DB_PASSWORD=""
          sample-python-api-qa/
            PRIVATE_PYTHON_API_DB_USERNAME=""
            PRIVATE_PYTHON_API_DB_PASSWORD=""
          sample-python-api-stg/
            PRIVATE_PYTHON_API_DB_USERNAME=""
            PRIVATE_PYTHON_API_DB_PASSWORD=""
          sample-python-api-prod/
            PRIVATE_PYTHON_API_DB_USERNAME=""
            PRIVATE_PYTHON_API_DB_PASSWORD=""

This can be created by changing to shared/vault/_devops/ and running configure.sh to create auth types, secrets engines, policies, users, roles, and secrets. It's partially idempotent: user passwords will not be changed if they exist, but secret usernames and passwords will be updated. The default passwords should be unique per user and can be reset using the Vault UI to a value shared with the user. This promotes better password hygiene and less password sharing.

Later with helm, we'll pull in the specific secret for the env.

Note: it is likely that different user accounts/policies will be needed to prevent unauthorized users from accessing prod secrets. For this series the same user can read all envs for that application. All the user roles in shared/vault/_devops/vault/roles share the same policies, sample-node-api-user or sample-python-api-user. These could be updated to reflect a specific env (dev, qa, stg, prod) and a specific Vault secret path (like sample/sample-node-api/sample-node-api-dev vs sample/sample-node-api/sample-node-api-qa).
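As a sketch of what such an env-specific policy could look like, the helper below prints a read-only Vault policy for one application in one environment. The function name env_read_policy and the policy name in the usage line are hypothetical and not part of the series codebase; the data/ segment in the path is how KV version 2 mounts expose secret contents to policies.

```shell
# Print a read-only policy for one app in one environment (hypothetical helper).
env_read_policy() {
  # $1 = app (e.g. sample-node-api), $2 = env (dev, qa, stg, prod)
  cat <<EOF
# KV v2 secrets are read through the data/ prefix of the mount
path "kvv2/data/sample/$1/$1-$2" {
  capabilities = ["read"]
}
EOF
}

# Usage, from a shell where the Vault CLI is authenticated:
# env_read_policy sample-node-api dev | vault policy write sample-node-api-dev-user -
```

A role bound to this policy could read the dev secret but would be denied on the qa, stg, and prod paths.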

Why are Python secrets in the list above?

The samples demonstrate how one could manage secrets for two different applications across different environments in the same Vault. Each application gets both an admin (read/write) role and a user (read only) role to control what each account can do. Try to update the secrets that are pulled in to be Python app secrets and you'll see the request is unauthorized.

Maybe later we'll come back and introduce a python equivalent to the sample Node API application.

Configuring Vault

In the folder shared/vault/_devops/ run configure.sh to configure the authentication methods and secrets engines, and to populate users, policies, roles, and secrets. It has checks so that passwords are only set for brand new users (it won't overwrite the passwords of existing users). Secret values, however, are rewritten every time it runs, which needs evaluation for each scenario.

If objects already exist, the script may report errors for those; it fails to update the existing items but keeps running.

Note: Several weakness classes (CWE-335, CWE-336, CWE-337, CWE-338, and the list goes on) concern weaknesses in the random seeds that power random character selection, so stronger password creation is advised, but left to the reader to implement; consult your security team.
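One common way to avoid weakly seeded generators in shell scripts is to draw characters straight from the kernel's random device. The sketch below assumes a system with /dev/urandom and a head that supports -c; the function name gen_password is hypothetical and this is not what configure.sh currently does. Treat it as a starting point to review with your security team rather than a vetted implementation.

```shell
# Generate a random alphanumeric password from the kernel random device.
gen_password() {
  # $1 = length (defaults to 32)
  # tr drops every byte outside the allowed set, so each kept character
  # is chosen uniformly from the 62 alphanumerics.
  LC_ALL=C tr -dc 'A-Za-z0-9' </dev/urandom | head -c "${1:-32}"
}

# Usage:
# NEW_PASSWORD=$(gen_password 40)
```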

Vault Secret Operator in practice

The Vault Secret Operator example leverages the Vault CLI, whereas this series leverages the Vault API: most of the Vault objects are created by the script shared/vault/_devops/configure.sh.

Deploying Vault Secret Operator

Once Vault is installed we can use the deploy.sh script in shared/vault-secrets-operator/_devops/ to deploy the Vault Secret Operator. Alternatively, we can use the deploy.sh script at shared/_devops/ to install all the shared aspects in the correct order: Vault first and then Vault Secret Operator.

Vault Secret Operator requires a few configurations in place

The first is the Kubernetes authentication method, which has roles. Each role in Vault ties together a Kubernetes namespace, a Kubernetes service account name, and a Vault policy.
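A sketch of creating such a role through the API, assuming the Kubernetes auth method is mounted at its default path kubernetes and that VAULT_ADDR and VAULT_TOKEN are exported. The helper names and the example role and service account names in the usage line are hypothetical; the JSON fields are the documented parameters of the Kubernetes auth role endpoint.

```shell
# Print the JSON body for a Kubernetes auth role (hypothetical helper).
k8s_role_payload() {
  # $1 = service account name, $2 = namespace, $3 = Vault policy name
  printf '{"bound_service_account_names":["%s"],"bound_service_account_namespaces":["%s"],"token_policies":["%s"],"token_ttl":"1h"}\n' "$1" "$2" "$3"
}

# Create or update the role through the Vault HTTP API.
create_k8s_role() {
  # $1 = role name, remaining arguments as for k8s_role_payload
  role_name=$1
  shift
  curl --silent --show-error --fail \
    --header "X-Vault-Token: ${VAULT_TOKEN}" \
    --request POST \
    --data "$(k8s_role_payload "$@")" \
    "${VAULT_ADDR}/v1/auth/kubernetes/role/${role_name}"
}

# Usage (names are illustrative):
# create_k8s_role sample-node-api-role sample-node-api-sa sample sample-node-api-user
```

The three arguments after the role name are exactly the mapping described above: service account, namespace, and policy.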

On the Vault side

It requires that the related Vault Policies are available. Check shared/vault/_devops/vault/policies for examples that are applied by shared/vault/_devops/configure.sh

On the Kubernetes side

This requires that the Kubernetes service account (defined in application/sample-node-api/_devops/kubernetes/01-sample-node-api.vault-operator-sa.yaml) is created in the namespace where the application lives.

The secret (like sample-app-node-api-local-db-connection-secret in the file application/sample-node-api/_devops/kubernetes/01-sample-node-api.secret.yaml) also needs to exist so that Vault Secret Operator can update it. It is created with default values including "TBD", which crash the app until they are changed.

VSO specific files like:

application/sample-node-api/_devops/kubernetes/01-sample-node-api.vault-auth-static.yaml helps the operator map the service account to the role with the authentication connection.

application/sample-node-api/_devops/kubernetes/01-sample-node-api.vault-static-secret.yaml helps the operator map the authentication method to the secret engine and the Kubernetes secret that should receive the updated Vault values.
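To make the two custom resources more concrete, here is a hedged sketch of what a VaultAuth and VaultStaticSecret pair can look like, wrapped in a shell function so it can be piped to kubectl. It is not a copy of the files in the repository: the resource, role, and service account names are assumptions, as are the sample namespace and the kubernetes auth mount. The field names follow the Vault Secrets Operator v1beta1 API.

```shell
# Print illustrative VSO manifests (names marked as hypothetical are assumptions).
vso_manifests() {
  cat <<'EOF'
apiVersion: secrets.hashicorp.com/v1beta1
kind: VaultAuth
metadata:
  name: sample-node-api-auth            # hypothetical name
  namespace: sample
spec:
  method: kubernetes
  mount: kubernetes                     # assumed auth mount path
  kubernetes:
    role: sample-node-api-role          # hypothetical Vault role
    serviceAccount: sample-node-api-sa  # hypothetical service account
---
apiVersion: secrets.hashicorp.com/v1beta1
kind: VaultStaticSecret
metadata:
  name: sample-node-api-static-secret   # hypothetical name
  namespace: sample
spec:
  vaultAuthRef: sample-node-api-auth
  type: kv-v2
  mount: kvv2
  path: sample/sample-node-api/sample-node-api-local
  refreshAfter: 30s
  destination:
    name: sample-app-node-api-local-db-connection-secret
    create: false                       # the Secret already exists in the cluster
EOF
}

# Usage:
# vso_manifests | kubectl apply -f -
```

The VaultAuth resource answers "who am I when talking to Vault", while the VaultStaticSecret answers "which Vault path feeds which Kubernetes secret, and how often to refresh it".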

Troubleshooting Vault?

Some of the error messages are vague and lack detail about what exactly is going on; enabling Vault's audit logs provides much more information about what's happening. Also leverage the events API (kubectl events -n sample) to get the high level issues at hand. Don't forget to leverage JWT decoders to dig into the tokens and see the username making the requests.
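If an online JWT decoder is not an option (tokens are credentials, after all), the payload can be decoded locally. A minimal sketch, assuming a base64 command that accepts -d; the function name jwt_payload is hypothetical, and the signature is not verified, so this is for inspection only.

```shell
# Decode the payload (second segment) of a JWT without verifying its signature.
jwt_payload() {
  # base64url -> base64: swap the URL-safe characters back
  segment=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # restore the padding that JWTs strip
  case $(( ${#segment} % 4 )) in
    2) segment="${segment}==" ;;
    3) segment="${segment}=" ;;
  esac
  printf '%s' "$segment" | base64 -d
}

# Usage, from inside a pod: inspect the service account token it presents to Vault.
# jwt_payload "$(cat /var/run/secrets/kubernetes.io/serviceaccount/token)"
```

The sub claim in the decoded JSON shows the namespace and service account, which can then be compared against the role bindings in Vault.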

Resilience

Leveraging 3+ instances in a High Availability model helps when one instance might crash.

In this series, we are leveraging a single instance of Vault which is not resilient, but it makes lives easier for local development. One can also leverage other resources on how to deploy Vault for High Availability.

Performance

Leveraging 3+ high availability nodes also helps from a performance perspective by ensuring there are enough workloads keeping secrets updated. The more secrets exist and the more frequently they are updated, the more replicas of VSO may be needed.

Security

Centralizing all secrets in one place is great, but it means putting all the secrets in one basket, and that basket needs to be highly secured.

Putting Vault and Vault Secret Operator in their own namespaces is just the beginning. Adding granular users with minimal access provided by roles in Vault, configuring the Kubernetes service accounts, roles and RBAC, enabling HTTPS, and locking down which networks expose the Vault UI are all critical to keeping that basket secure.

Wrap up

We've covered what Vault is, how to install it, unseal it, and create secrets engines/users/roles/secrets, and how to leverage Vault Secrets Operator to keep our deployments up to date with the latest secrets.

Moving along

Continue with the seventh article of the series: Helm and Environments

This series is also available with the accompanying codebase.

Stuck with setup? Refer to full project setup instructions.

Done with the series? Clean up the project workspace.