Part 4: Containerization, Kubernetes Starter

Written August 23rd, 2024 by Nathan Frank

Photo source by BoliviaInteligente on Unsplash

Recap

This article picks up from the third article: Application Starter in the Kubernetes Starter series.

Know about the value Containerization brings? Skip to Kubernetes and Orchestration.

Containerization concepts

What's a container?

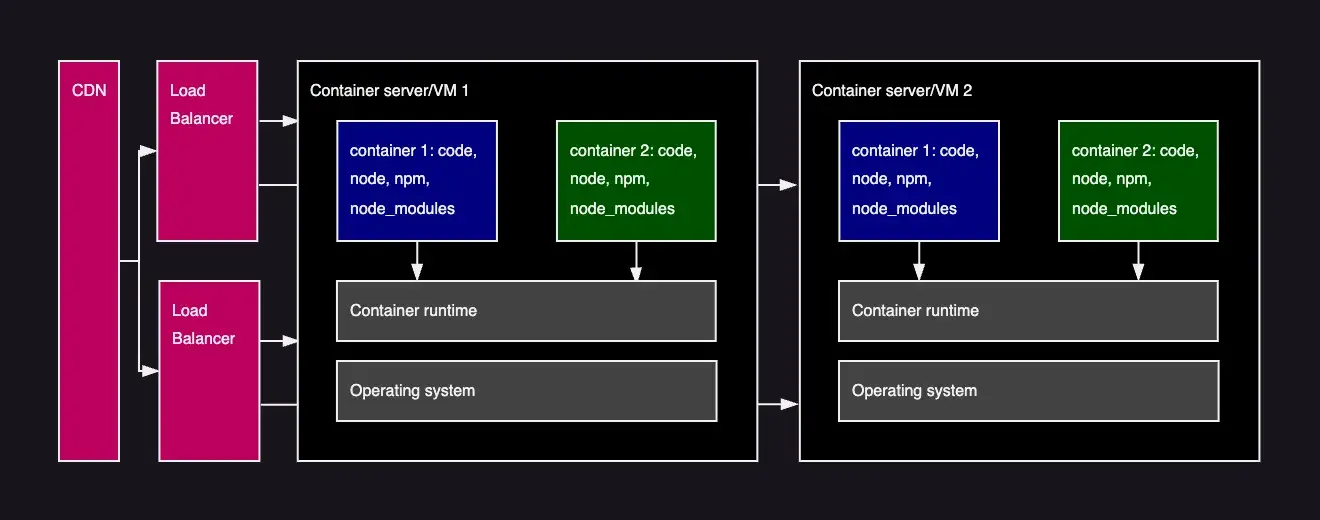

We've already talked about containerization in Why Kubernetes, but as a refresher: it's a method of building container images and then running that exact version of the image as a container as many times as needed.

It's become industry standard as it:

- Resolves the "works on my machine" problem, since the same image runs everywhere.

- Contains all the dependencies needed to run the software.

Our sample application as containers

This allows the operating system and hardware to be updated independently of the runtime and package dependencies the application requires.

Containers under the hood

Layers

When creating a container image you don't start from scratch. You begin from an official image that already has most of what you need and add to it. For security, it's important to know what's in the image you are starting from.

Most instructions in a Dockerfile that are executed as part of a docker build create a new layer on top of the previous layer.

Need a web server to host your files? Don't build one from scratch, use the official nginx image and layer in your specific files that need to be hosted.
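As a minimal sketch of that idea (illustrative only, not necessarily the Dockerfile used in this series), static files can be layered on top of the official nginx image. The `public` folder name is an assumption; `/usr/share/nginx/html` is the default content directory of the official image.

```dockerfile
# hypothetical Dockerfile: add static files on top of the official nginx image
ARG NGINX_VERSION=1.27-alpine
FROM nginx:${NGINX_VERSION}

# "public" is an assumed folder that holds the files to host
COPY ./public /usr/share/nginx/html
```

Everything nginx needs comes from the base image layers; the only layer added here is the copied files.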

Registries

Container images, especially official images, are hosted in container image registries, where they are stored as layers. If you already have image A locally, which consists of 10 layers, and image B consists of 6 layers, 4 of which are shared with image A, pulling image B from the registry only pulls the 2 layers that are not already present.
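The layer arithmetic can be sketched in a few lines of shell. The layer names below are made up for illustration (real registries identify layers by sha256 digests), and the function only mimics the comparison a pull performs.

```shell
#!/bin/bash
# Hypothetical layer IDs: image A (already local) has 10 layers, image B has 6,
# and the first 4 layers are shared between them.
local_layers="base-1 base-2 base-3 base-4 a-5 a-6 a-7 a-8 a-9 a-10"
image_b_layers="base-1 base-2 base-3 base-4 b-5 b-6"

# Print the layers in the second list that are missing from the first list.
layers_to_pull() {
  local have="$1" need="$2" layer
  for layer in $need; do
    case " $have " in
      *" $layer "*) ;;        # already present locally, nothing to pull
      *) echo "$layer" ;;     # missing locally, has to be pulled
    esac
  done
}

layers_to_pull "$local_layers" "$image_b_layers"
```

Only the two layers unique to image B are listed, which matches the 6 minus 4 calculation above.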

Initially Docker Hub was the only container image registry to work with, but registries are now a standard offering. GitHub, GitLab, Nexus (platform or open source), and Artifactory are just a few options, or you can host your own docker registry.

You build a container image, tag it, and push it to a registry (assuming you have access). Then others (or CI/CD infra that has access) can pull those images and run them as needed.
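The build, tag, push flow could look roughly like the sketch below. The registry host `registry.example.com` and the version `1.0.0` are placeholders, and the Docker commands only run when `PUSH=1` is set, so the script is safe to try without registry access.

```shell
#!/bin/bash
# Placeholders: swap in a registry you can push to and a real version.
REGISTRY="registry.example.com"
IMAGE="kubernetes-samples/sample-node-api"
VERSION="1.0.0"

# Compose the fully qualified reference: <registry>/<repository>:<tag>
image_ref() {
  printf '%s/%s:%s\n' "$1" "$2" "$3"
}

TARGET="$(image_ref "$REGISTRY" "$IMAGE" "$VERSION")"
echo "target image: $TARGET"

# Only talk to Docker when explicitly asked, e.g. PUSH=1 ./push.sh
if [ "${PUSH:-0}" = "1" ]; then
  docker tag "$IMAGE" "$TARGET"   # add the registry-qualified tag to the local image
  docker push "$TARGET"           # upload the layers the registry does not have yet
fi
```

Anyone with access to that registry can then run `docker pull` with the same reference.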

Containers hands on

Let's dig into the actual project

A container runtime

The default standard for running containers has historically been Docker. Docker Desktop is a great tool from Docker (free for students and people learning) that helps you understand and manage images and running containers.

In the enterprise world, the cost of Docker Desktop adds up quickly.

While Docker Desktop has added support for running K8s locally as well, another alternative is available: Rancher Desktop.

- It provides a drop-in replacement for Docker (install Rancher Desktop and get a docker CLI that interfaces with the Rancher Desktop local image repo and runs containers in Rancher Desktop).

- It allows updating and changing which version of K8s is running (test in new K8s versions locally).

- It's free and open source.

If you are learning a new tool, why not choose one that's open source? This series assumes that Rancher Desktop is installed locally using moby and that the default traefik is enabled (although nginx-ingress would also work), but we'll use kubectl commands, which apply whichever K8s provider is used.

Note that there is a difference between the docker CLI and the nerdctl CLI, which has to do with containerd. The most noted difference is that nerdctl doesn't contain Docker's docker system prune -a and does contain other containerd commands.

There's also Podman which is worth looking at as well. I've been using Rancher Desktop since Docker changed their licensing fees and it's served me well.

A moment for open source

If something is free and open source, then we can all use it without any regard for the effort the community is putting into those tools, right? Wrong. We've all seen the problems that happen due to supply chain attacks that may have been avoided if companies with money supported people who make the tools they use.

Consider talking with your leadership about sponsoring an open source project, getting a potential tax break, and getting your company's logo on a support page.

The Dockerfile file

The Dockerfile sets up the steps to take a base image and then layer additional files and actions on top to produce a container image that can be instantiated multiple times.

```dockerfile
# application/sample-node-api/Dockerfile

# allow the version of node to be input/configured
ARG NODE_VERSION
FROM node:$NODE_VERSION

# make the folder and ensure it's owned by the right user/group
RUN mkdir -p /home/node/app/node_modules && chown -R node:node /home/node/app

# set the working directory to the node app directory
WORKDIR /home/node/app

# copy over the package.json and package-lock.json files
COPY --chown=node:node package*.json ./

# set the user that the node app will run as
USER node

# perform the installation of dependencies
RUN npm install

# copy over the source files and change ownership
COPY --chown=node:node . .

# notate the port that gets exposed
EXPOSE 3000

# the command to run when starting up the container
CMD [ "node", "src/app.js" ]
```

The .dockerignore file

Similar to a .gitignore file, a .dockerignore file tells Docker which files it should not include during a build.

```
# application/sample-node-api/.dockerignore
.env*
.DS_Store
Dockerfile
.dockerignore
_devops
node_modules
```

Multistage build files

Multistage builds are great for scenarios where one needs to have tools to build an application or perform a transform, but those tools are not needed in the final deployment.

One build phase could transpile a web UI app to JS, while the second build phase hosts those static files from an nginx server. This reduces the attack surface of the container. Besides being better for security, it makes for smaller images and less network traffic when deploying containers.
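A minimal sketch of such a two-phase build is shown below. It is not the Dockerfile from the sample project: the image versions, the `build` npm script, and the `dist` output folder are all assumptions made for illustration.

```dockerfile
# hypothetical multistage Dockerfile for a web UI

# stage 1: build with the full Node.js toolchain
FROM node:20-alpine AS build
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
# assumes package.json defines a "build" script that writes to ./dist
RUN npm run build

# stage 2: only the static output is copied into the final image
FROM nginx:1.27-alpine
COPY --from=build /app/dist /usr/share/nginx/html
```

Node.js, npm, and the source files exist only in the first stage, so none of them ship in the image that gets deployed.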

Building and Tagging containers

Looking at one of the build.sh files:

1#! /bin/bash

2

3cd "$(dirname "$0")"

4echo "****** application shared node api build starting ******"

5

6docker build ../ -t kubernetes-samples/sample-node-api -f ../Dockerfile --build-arg NODE_VERSION=20-alpine

7

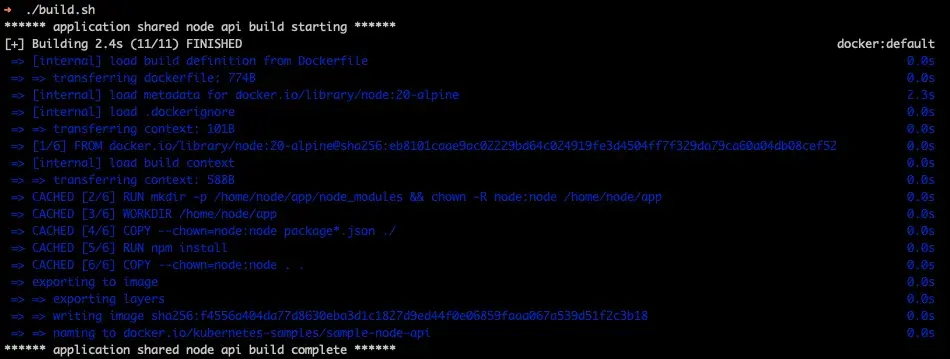

8echo "****** application shared node api build complete ******"Provides the following output:

Output of the sample node api build script shows we've added 6 layers to the image. Note the base image layers were already locally downloaded and the layers are showing cached as no changes have happened since we've run the build.

We can see that we are:

- running the `docker build` command

- `-t` adds a tag of `kubernetes-samples/sample-node-api`

- `-f` adds the path to the `Dockerfile` to use

- `--build-arg` passes an argument into the Dockerfile that is used during the build cycle

Note: I don't control the `kubernetes-samples` Docker Hub account, but for this example everything is local so it doesn't matter.

There's also another build.sh file:

```shell
#!/bin/bash
# application/sample-nginx-web/_devops/build.sh

cd "$(dirname "$0")"
echo "****** application shared nginx web build starting ******"

docker build ../ -t kubernetes-samples/sample-nginx-web:latest -f ../Dockerfile --build-arg NGINX_VERSION=1.27-alpine

echo "****** application shared nginx web build complete ******"
```

Which generates the following output:

Output of the sample nginx web build script shows there are only 2 layers created with this image. Again the base layers and added layers are cached with no recent changes.

The :latest tag

The :latest image tag can be tricky. It appears to always point at the newest version of whatever image exists, but it is simply the default tag that is used when no other tag is given. Even when it is up to date, changes in the image may introduce breaking changes that prevent your containers from running.

Also, as the :latest tag is not unique, it's hard for docker systems to know whether they have the latest image. For this reason it's highly recommended to tag images using semver and to update your CI/CD process to use the semver version number as part of the build and deploy.
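One way to apply that recommendation is to validate the version before it becomes a tag. The sketch below is an assumption about how a build script could do this, not the script from the sample project; the version `1.4.2` is a placeholder that a CI/CD pipeline would normally supply, and the build only runs when `BUILD=1` is set.

```shell
#!/bin/bash
IMAGE="kubernetes-samples/sample-node-api"
VERSION="1.4.2"   # placeholder version

# Succeed only for plain MAJOR.MINOR.PATCH versions such as 1.4.2
is_semver() {
  printf '%s\n' "$1" | grep -Eq '^[0-9]+\.[0-9]+\.[0-9]+$'
}

if ! is_semver "$VERSION"; then
  echo "refusing to build: '$VERSION' is not a semver version" >&2
  exit 1
fi

TAGGED_IMAGE="$IMAGE:$VERSION"
echo "building $TAGGED_IMAGE"

# Opt in with BUILD=1 when run from the _devops folder next to the Dockerfile
if [ "${BUILD:-0}" = "1" ]; then
  docker build ../ -t "$TAGGED_IMAGE" -f ../Dockerfile --build-arg NODE_VERSION=20-alpine
fi
```

Because every build produces a unique tag, a deployment that references `1.4.2` can never silently pick up a different image.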

For this Kubernetes starter, one needs to delete a deployment that contains an application image before redeploying otherwise the :latest version isn't picked up.

Running containers locally

These images are now built and in the local docker image registry.

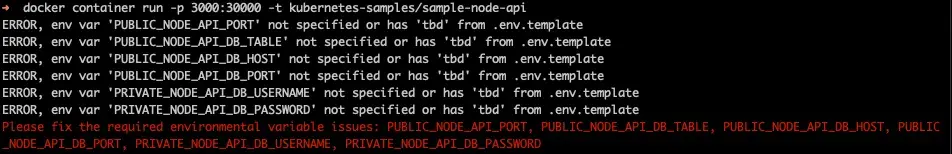

```shell
docker container run -p 30000:3000 -t kubernetes-samples/sample-node-api
```

This starts up the container, mapping host port 30000 to container port 3000, and hitting http://localhost:30000/config should reach the endpoint that lists out the config and secrets.

But it doesn't, why not?

We specifically put code into the application that logs a message when required env vars are missing and then exits.

While we could pass in env vars like this:

```shell
docker container run -p 30000:3000 --env PUBLIC_NODE_API_PORT=3000 --env PUBLIC_NODE_API_DB_HOST=localhost --env PUBLIC_NODE_API_DB_PORT=5432 --env PUBLIC_NODE_API_DB_TABLE=sample_table --env PUBLIC_NODE_API_MODE=dev ...
# note that I didn't put in the PRIVATE vars here, but they would need to be included
```

It's better to point the command to a local file that's not being checked in to version control:

```shell
#!/bin/bash
# application/sample-node-api/_devops/deploy.sh

cd "$(dirname "$0")"
echo "****** application shared node api run starting ******"

# does not work without env vars
#docker container run -p 30000:3000 -t kubernetes-samples/sample-node-api

# env vars file opens on port 9000

docker container run -d --env-file ../.env -p 127.0.0.1:30000:9000 -t kubernetes-samples/sample-node-api

echo "****** application shared node api run complete ******"
```

Yes, placing secrets in an article is also secret leaking, and this is checked into the codebase, which should be avoided in actual work.

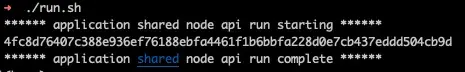
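Since the application exits when env vars are missing, a small pre-flight check on the env file can save a failed start. The sketch below is an assumption, not part of the sample project. It uses the PUBLIC variable names from the docker run example above (the PRIVATE ones would need to be added) and demonstrates the check against a throwaway file with placeholder values.

```shell
#!/bin/bash
# Names taken from the docker run example; the PRIVATE vars are left out here too.
REQUIRED_VARS="PUBLIC_NODE_API_PORT PUBLIC_NODE_API_DB_HOST PUBLIC_NODE_API_DB_PORT PUBLIC_NODE_API_DB_TABLE PUBLIC_NODE_API_MODE"

# Print every required name that has no NAME=value line in the given env file.
missing_vars() {
  local env_file="$1" name
  for name in $REQUIRED_VARS; do
    grep -q "^${name}=" "$env_file" || echo "$name"
  done
}

# Demo against a temporary file with placeholder values
demo_env="$(mktemp)"
printf 'PUBLIC_NODE_API_PORT=9000\nPUBLIC_NODE_API_MODE=dev\n' > "$demo_env"
missing_vars "$demo_env"
rm -f "$demo_env"
```

In a run script the same function could be pointed at `../.env`, stopping before `docker container run` if it prints anything.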

Running ./docker-run.sh provides output like:

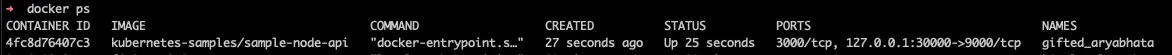

and we can see with docker ps that there's an instance running:

We can use http://localhost:30000/config to access the config API endpoint.

Stop the container with docker kill gifted_aryabhata where gifted_aryabhata is the name of the container as seen in the docker ps command.

A similar run command is also available at application/sample-nginx-web/_devops/docker-run.sh to run the standalone sample nginx web container. Another command application/sample-nginx-web/_devops/docker-stop.sh can shut down the container.

Using docker compose

Besides running manual commands to build and run docker containers, there's another method to manage containers. This is especially helpful when a workload requires multiple containers.

The way to do this is by creating a compose.yaml file and using docker compose up and docker compose down to run it. Using docker compose to stand up some quick containers is often helpful, and it's worth learning for sure. As this series is focused more on Kubernetes, we won't go into depth. There are also great resources out there.
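For orientation only, a compose.yaml for the two sample images might look something like the sketch below. The file location, the env file path, and the nginx port mapping are assumptions; the node api port mapping mirrors the run script shown earlier.

```yaml
# hypothetical compose.yaml at the repository root
services:
  sample-node-api:
    image: kubernetes-samples/sample-node-api
    env_file:
      - ./application/sample-node-api/.env
    ports:
      - "127.0.0.1:30000:9000"   # host 30000 -> container 9000, as in the run script

  sample-nginx-web:
    image: kubernetes-samples/sample-nginx-web:latest
    ports:
      - "127.0.0.1:30001:80"     # assumed: nginx listening on its default port 80
```

With that file in place, `docker compose up -d` starts both containers and `docker compose down` stops and removes them.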

Resilience

As containers are self-contained they are inherently portable (pushed and pulled through a container registry), so they can be stood up on other hosts as needed. One needs to build the application to allow for stateless horizontal scaling.

While one could leverage Docker swarm for managing replicas, we'll be using K8s coming up.

Performance

Applications running in containers perform nearly as well as they do on bare metal, but there's another performance consideration to keep in mind: developer performance.

When developing a Dockerfile it's important to understand that there's a layer cache. If the first layer doesn't change because the starting image hasn't changed, docker will reuse the cached layer. If the second command copies a file and the file is the same, that layer can be reused from the cache too. Engineers can order the commands in a Dockerfile to maximize caching, which has build performance implications.

If layer 3 of 6 changes, layers 4, 5, and 6 need to be rebuilt as well. Choose the order of your commands wisely to leverage the cache during builds.

As the image creation process can take lots of time, it's important for engineers to optimize this step before it slows down every build, local and remote.
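For contrast with the sample node api Dockerfile shown earlier, which copies package*.json and runs npm install before copying the source files, here is a sketch of a less cache-friendly ordering. It is illustrative only and not part of the sample project.

```dockerfile
# for contrast: a less cache-friendly ordering
FROM node:20-alpine
WORKDIR /home/node/app

# copying everything first means any source edit invalidates this layer...
COPY . .

# ...so dependencies are reinstalled on every build, even when package.json is unchanged
RUN npm install

CMD [ "node", "src/app.js" ]
```

Placing the steps that change least often first keeps the expensive dependency install in the cache for most builds.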

Security

Threat actors will try to break into applications and then pivot to see what other resources they get access to.

Least privileges

Most of the underlying container platform is abstracted away from users of containers, but many containers run on containerd. Containers do not have to run as root: they can run as users without sudo access. When they don't, aggressors can escalate access and leverage root or sudo access to break out of a container.
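Beyond the `USER node` line in the Dockerfile, privileges can also be reduced when the container is started. The flags below are standard `docker container run` options, but this combination is a sketch that has not been tested against the sample application, which also still needs its env file and port mapping. The command only runs when `RUN=1` is set.

```shell
#!/bin/bash
IMAGE="kubernetes-samples/sample-node-api"

# Collect the hardening flags as positional parameters so they stay correctly quoted
set -- \
  --user node \
  --cap-drop ALL \
  --security-opt no-new-privileges \
  --read-only

echo "docker container run -d $* $IMAGE"

# Opt in with RUN=1 once the flags have been checked against your application;
# an app that writes temp files may additionally need something like --tmpfs /tmp
if [ "${RUN:-0}" = "1" ]; then
  docker container run -d "$@" "$IMAGE"
fi
```

`--user` picks the non-root account, `--cap-drop ALL` removes Linux capabilities, `--security-opt no-new-privileges` blocks privilege escalation through setuid binaries, and `--read-only` makes the container's root filesystem read only.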

Start from the least dependencies possible

If an aggressor gets into a container, the more tools they have at their disposal the more opportunity they have to exploit and laterally move across the system.

Use a Dockerfile with a multistage build process that compiles the code, but only deploys the binary to the final container image. That way the compiler and build tools are not at the aggressor's disposal.

Use a base image built on Alpine, which ships with fewer of the Linux commands that are commonly available out of the box.

Consider no package manager which means no installing of additional software.

Consider base images without wget or curl which makes it harder for an aggressor to download external files.

Volume mounts

Containers often mount file systems to store or access files. Make sure that the same service account isn't used for all the applications, and make unique mounts for just what is needed. Consider making them read only or write only to prevent attackers from exfiltrating critical files.
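As a sketch of a narrowly scoped, read-only mount with plain Docker: the host folder `config` and the target path inside the container are hypothetical, and the command only runs when `RUN=1` is set. Note that with `--mount` the source folder has to exist already.

```shell
#!/bin/bash
IMAGE="kubernetes-samples/sample-node-api"

# Build a --mount value for a read-only bind mount.
# $1 = host directory, $2 = path inside the container
readonly_mount() {
  printf 'type=bind,source=%s,target=%s,readonly\n' "$1" "$2"
}

# Hypothetical paths: mount only the one folder this container needs
MOUNT="$(readonly_mount "$PWD/config" "/home/node/app/config")"
echo "docker container run -d --mount $MOUNT $IMAGE"

if [ "${RUN:-0}" = "1" ]; then
  docker container run -d --mount "$MOUNT" "$IMAGE"
fi
```

A process inside the container can read the mounted files but cannot modify them, and nothing else from the host is visible.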

Consider the blast radius

If an aggressor gets in, what ways could they move laterally and compromise other systems? What data do they have access to? What's the worst that could happen? Think defensively and work with your security team to ensure the right levels are met especially when Personally Identifiable Information (PII) or Personal Data are involved. Data privacy is everyone's responsibility.

These are far from the only security measures that should be considered, but they are a few things that should be evaluated, especially in the context of containerization.

Wrap up

We now have an understanding of how to take a basic application and get it running as a container. We've gotten the app built into a container image (_devops/build.sh), running as a container (_devops/docker-run.sh), and stopped (_devops/docker-stop.sh).

Moving along

Continue with the fifth article of the series: Kubernetes and Orchestration

This series is also available with the accompanying codebase.

Stuck with setup? Refer to full project setup instructions.

Done with the series? Cleanup the project space.